AI computing is becoming more specialized. Instead of relying on just one type of chip (like a GPU), tech giants are now assembling custom silicon stacks to handle the rising complexity and scale of AI. This is especially critical as we move from generative AI, where the focus is on producing content, to Agentic AI, where systems must reason, make decisions, and act independently across continuous workflows.

XPUs and custom ASICs are emerging as key enablers of this new phase, delivering the speed and efficiency needed for real-time, autonomous inference. They’re not replacing GPUs but augmenting them, powering a new generation of AI that doesn’t just create but gets things done.

From Generative to Agentic AI: A New Compute Frontier

Just as the world was beginning to grasp the potential of generative AI—models that churn out text, images, and code—a more ambitious wave is quietly building: Agentic AI.

These aren’t just systems that create; they think, plan, and act. An Agentic AI system doesn’t wait for your next prompt. It breaks goals down into subtasks, interacts with tools and APIs, monitors its own progress, and adjusts course on its own. It’s the difference between a painter and a project manager.

And unlike static content generation, this requires relentless real-time inference, tight decision loops, and ultra-efficient hardware. The spotlight now shifts to application-specific integrated circuits (ASICs) and XPUs: custom-built chips that can execute these tasks faster and more efficiently. In this new AI paradigm, it’s not just about intelligence; it’s about execution at scale.
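To make the "plan, act, monitor, adjust" cycle concrete, here is a minimal Python sketch of an agent loop. Everything in it is hypothetical: the tools (`search_flights`, `book_hotel`) are stubs, and `plan` is a stand-in for what would, in a real agentic system, be a model inference call. That is the hardware point: every pass through the loop can trigger another round of inference, so an agent generates far more inference work than a single prompt-and-answer exchange.

```python
from typing import Callable

# Hypothetical tools; a real agent would call external APIs here.
def search_flights(city: str) -> str:
    return f"found flights to {city}"

def book_hotel(city: str) -> str:
    return f"booked hotel in {city}"

TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "book_hotel": book_hotel,
}

def plan(goal: str) -> list[tuple[str, str]]:
    """Break a goal into (tool, argument) subtasks.

    Hypothetical stand-in for a model call: a real agent would ask an LLM
    to produce this plan, which is one inference request per (re)plan.
    """
    city = goal.split()[-1]
    return [("search_flights", city), ("book_hotel", city)]

def run_agent(goal: str, max_steps: int = 10) -> list[str]:
    """Plan, act, observe, and re-plan until the subtasks are done."""
    pending = plan(goal)
    history: list[str] = []
    for _ in range(max_steps):           # bound the loop so it always terminates
        if not pending:
            break                        # every subtask completed
        tool, arg = pending.pop(0)
        observation = TOOLS[tool](arg)   # act through a tool
        history.append(observation)      # monitor progress
        if observation.startswith("error"):
            pending = plan(goal)         # adjust course by re-planning
    return history

print(run_agent("plan a trip to Paris"))
# ['found flights to Paris', 'booked hotel in Paris']
```

The stub tools never fail, so the re-planning branch is not exercised here; with real APIs, a failed call would send the agent back to `plan`, adding yet another inference step.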

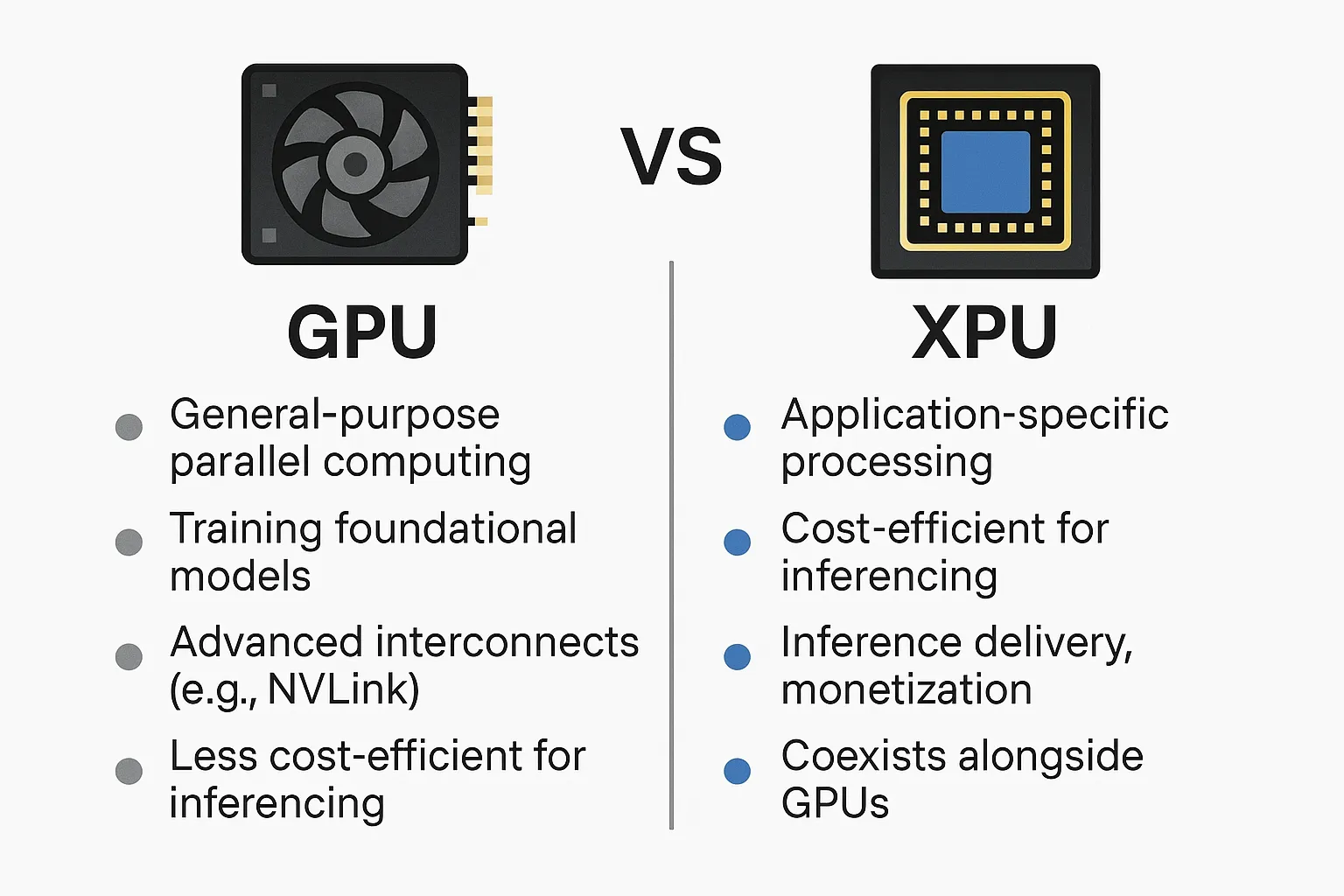

What Are GPUs and XPUs Anyway?

Think of GPUs as the all-rounders of computer chips. They’re good at doing lots of things at once and are used heavily in building (or "training") AI models, like the ones behind ChatGPT and image generators.

XPUs, on the other hand, are more like specialists. They’re designed to do a narrower set of jobs very well, like answering AI queries, running smart assistants, or handling video recommendations. "XPU" is a catch-all label: these chips go by names such as TPUs (tensor processing units), DPUs (data processing units), or simply "custom AI chips", but they all aim to do specific jobs quickly and efficiently.

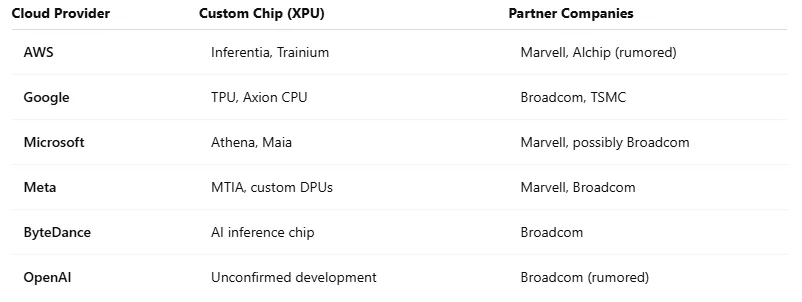

Who’s Winning in This New AI Chip Race?

- Marvell: Co-developing chips with AWS, Microsoft, and Meta.

- Broadcom: Supplying AI chip tech to Google, Meta, and others.

- TSMC: Building nearly all these chips in its factories.

- Synopsys & Cadence: Providing tools to design these chips.

Why Are We Talking About XPUs Now?

Because the biggest cloud companies, known as hyperscalers (Google, Amazon, and Microsoft among them), are all designing or deploying XPUs to cut costs and improve performance. These chips are:

- Cheaper to run for specific tasks

- More energy-efficient

- Built for real-time AI uses, like chatbots or video recommendations

Training AI models on GPUs is expensive and slow. Once a model is trained, XPUs can take over the job of serving it, delivering fast, cost-effective results to millions of users.

Nvidia’s Big Shift: Working With XPUs

Nvidia, the top GPU maker, recently introduced NVLink Fusion, which opens its NVLink chip-to-chip interconnect to other companies’ custom CPUs and XPUs so they can work alongside Nvidia hardware. This is a big change: instead of doing everything alone, Nvidia is helping different types of chips work together.

This move shows that even Nvidia sees the future as a team sport—with GPUs handling the complex model training, and XPUs running the everyday, money-making AI services.
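One simplified way to picture this division of labor is a scheduler that prefers XPUs for inference and reserves GPUs for training. The Python sketch below is hypothetical throughout: the pool names, slot counts, and the fallback rule (letting general-purpose GPUs absorb inference overflow, while the specialized XPU pool never takes training jobs) are illustrative assumptions, not a description of how Nvidia or any hyperscaler actually schedules work.

```python
from enum import Enum
from typing import Optional

class Kind(Enum):
    TRAINING = "training"
    INFERENCE = "inference"

# Hypothetical routing policy: pools allowed to run each workload, in order of preference.
# GPUs are general-purpose, so they also serve as a fallback for inference;
# the XPU pool is assumed to be specialized for inference only.
ELIGIBLE: dict[Kind, list[str]] = {
    Kind.TRAINING: ["gpu"],
    Kind.INFERENCE: ["xpu", "gpu"],
}

def route(kind: Kind, free_slots: dict[str, int]) -> Optional[str]:
    """Return the first eligible pool with a free slot, or None if the job must wait."""
    for pool in ELIGIBLE[kind]:
        if free_slots.get(pool, 0) > 0:
            free_slots[pool] -= 1   # reserve one slot in the chosen pool
            return pool
    return None

slots = {"gpu": 1, "xpu": 1}
print(route(Kind.INFERENCE, slots))  # xpu
print(route(Kind.INFERENCE, slots))  # gpu (XPU pool is full, so fall back)
print(route(Kind.TRAINING, slots))   # None (no GPU slot left)
```

The asymmetry in `ELIGIBLE` mirrors the all-rounder versus specialist distinction: the generalist can cover for the specialist, but not the other way around.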

What’s the Difference Between Training and Inference?

- Training is teaching the AI how to think. It takes a long time and lots of expensive GPUs.

- Inference is when the AI uses what it’s learned to give you answers or suggestions. Each answer has to arrive quickly, and a popular service may handle many of these requests every second.

Inference is where the money is made—when you ask Siri a question, or YouTube suggests a video, or a chatbot answers you. This is where XPUs shine.
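The contrast between the two phases can be shown with a deliberately tiny Python example: a one-parameter model, y = w * x, fitted with plain stochastic gradient descent (a standard textbook choice for this sketch, not the method behind any particular product). Training repeatedly runs the model, measures its error, and nudges `w`; inference runs the finished model once per request. The dataset and the `train`/`infer` names are made up for illustration.

```python
# Toy dataset generated from y = 3x; training should recover w close to 3.
DATA: list[tuple[float, float]] = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

def train(data: list[tuple[float, float]], epochs: int = 200, lr: float = 0.01) -> tuple[float, int]:
    """Fit y = w * x with stochastic gradient descent.

    Returns the learned weight and how many weight updates were performed.
    """
    w, updates = 0.0, 0
    for _ in range(epochs):                # many passes over the same data
        for x, y in data:
            pred = w * x                   # forward pass: make a prediction
            grad = 2 * (pred - y) * x      # gradient of (pred - y)**2 with respect to w
            w -= lr * grad                 # adjust the weight to reduce the error
            updates += 1
    return w, updates

def infer(w: float, x: float) -> float:
    """Inference: one forward pass with the already-trained weight, no updates."""
    return w * x

w, updates = train(DATA)
print(f"learned w = {w:.3f} after {updates} updates")  # learned w = 3.000 after 600 updates
print(f"prediction for x = 10: {infer(w, 10.0):.1f}")   # prediction for x = 10: 30.0
```

Training revisited every example 200 times to settle on a single number; each inference request is one multiplication. Scale both up to billions of parameters and millions of users and you get the split described above: a heavy, one-off training job versus a constant stream of small, latency-sensitive inference calls.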

The Big Picture

AI computing is becoming more specialized. Instead of relying on just one type of chip (like a GPU), companies are combining different chips, including custom ones, each built to handle specific jobs faster and more cheaply.

XPUs aren’t here to replace GPUs but to work alongside them. They’re making AI services more scalable, efficient, and profitable. As this shift happens, companies like Marvell, Broadcom, and TSMC are becoming critical players behind the scenes.
