Over the last two years, Nvidia has firmly established itself as the backbone of the artificial intelligence revolution. Its dominance in training large AI models is widely understood and already reflected in both earnings growth and market valuation. However, the next phase of AI adoption is not just about building ever-larger models. It is about serving AI to millions of users, in real time, at an economically sustainable cost.

Viewed through this lens, Nvidia’s decision to integrate Groq’s inference technology and senior leadership into its broader ecosystem should be seen as a strategic extension of its long-term architecture, rather than a standalone acquisition or a deviation from its core GPU strategy.

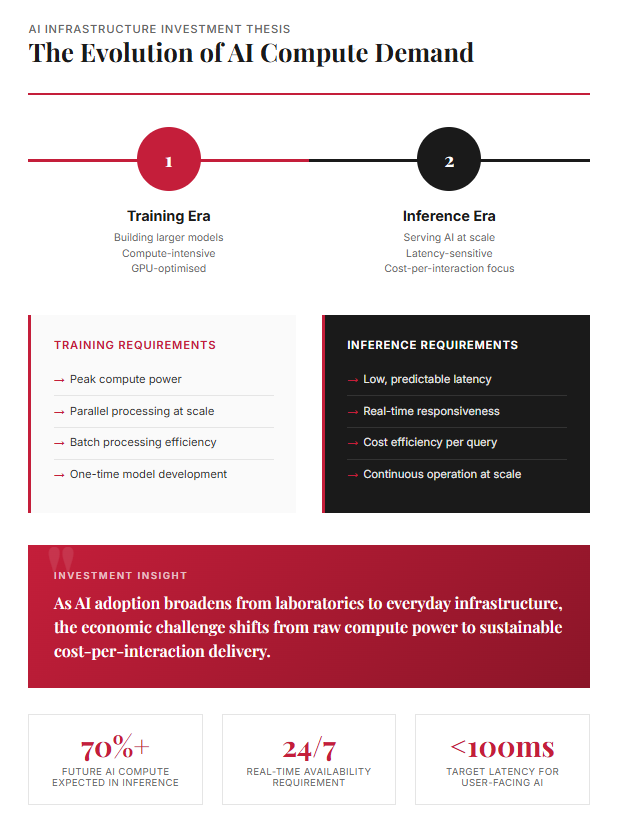

From Training to Inference: Where the Next Growth Lies

The first wave of AI investment was driven by training. This is a compute-intensive process, well suited to Nvidia’s high-performance GPUs, and it remains a critical part of the ecosystem.

The second wave, which is now gaining momentum, is fundamentally different. AI is increasingly used in interactive, real-time applications such as chat interfaces, voice assistants, enterprise copilots, and autonomous agents. In these use cases, the model has already been trained; the challenge is how quickly and reliably it can respond to individual user requests.

This stage, known as inference, is expected to:

- represent a larger and more durable source of AI demand over time, and

- shift customer focus away from peak compute power toward latency, predictability, and cost per interaction.

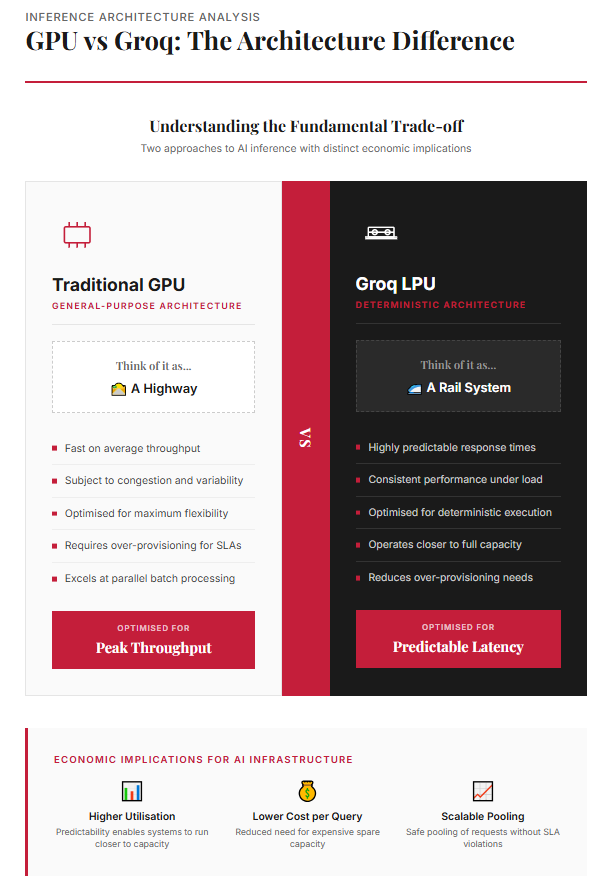

The Structural Challenge With General-Purpose GPUs

Nvidia’s GPUs are exceptional general-purpose processors. They are most efficient when handling large volumes of work that can be processed in parallel. However, many real-world AI interactions arrive one request at a time and require near-instant responses.

This creates a structural tension. To meet strict response-time guarantees, AI service providers often need to keep spare GPU capacity available at all times. While this ensures a good user experience, it raises the cost of serving AI, particularly for low-batch or batch-size-one workloads.

In simple terms:

- GPUs excel at throughput

- Real-time inference increasingly demands predictability

As AI adoption broadens, the cost of that idle headroom turns this inefficiency into an economic issue, not just a technical one.
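The cost of spare capacity can be made concrete with simple arithmetic. The sketch below is illustrative only: the hourly cost, peak throughput, and utilization levels are hypothetical placeholders, not figures for any Nvidia or Groq product. It assumes cost per request is the hourly hardware cost divided by the requests actually served in that hour.

```python
def cost_per_request(hourly_cost: float,
                     peak_requests_per_hour: float,
                     utilization: float) -> float:
    """Cost of one served request when hardware runs at a fraction of peak.

    All inputs are hypothetical; utilization is the share of peak
    throughput actually used (the rest is headroom kept for latency).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_cost / (peak_requests_per_hour * utilization)


if __name__ == "__main__":
    # Hypothetical accelerator: $4/hour, 10,000 requests/hour at peak.
    for u in (0.3, 0.6, 0.9):
        print(f"utilization {u:.0%}: ${cost_per_request(4.0, 10_000, u):.6f} per request")
```

Under these assumptions, a fleet held at 30% utilization to protect response times pays about twice as much per request as one that can safely run at 60%.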

What Groq Brings: Predictability and Cost Control

Groq’s inference technology is built around a different design philosophy. Rather than optimizing for maximum flexibility, it is optimized for deterministic execution, meaning that response times are highly predictable.

This predictability matters because it allows AI systems to:

- operate closer to full capacity,

- reduce the need for costly over-provisioning,

- and deliver more consistent response times under load.

A useful analogy: traditional GPU-based inference is like a highway, fast on average but subject to congestion, while Groq’s approach is closer to a scheduled rail system, with fewer routes but reliable arrival times.

For latency-sensitive, user-facing AI applications, this distinction has direct economic implications.
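One way to see why predictability permits higher utilization is a textbook single-server queue (M/G/1), used here purely as an assumption and not as a model of any specific chip. By the Pollaczek-Khinchine formula, the mean queueing delay in units of mean service time is ρ(1 + c²) / (2(1 − ρ)), where ρ is utilization and c² is the squared coefficient of variation of service time. Solving for ρ gives the highest utilization that stays within a given delay budget. The sketch covers mean delay only; tail-latency guarantees would be stricter.

```python
def max_utilization(wait_budget: float, cv_squared: float) -> float:
    """Highest utilization that keeps mean M/G/1 queueing delay within budget.

    wait_budget: allowed mean wait, in multiples of the mean service time.
    cv_squared:  squared coefficient of variation of service time
                 (0 = fully deterministic, 1 = exponential).
    """
    if wait_budget <= 0 or cv_squared < 0:
        raise ValueError("wait_budget must be > 0 and cv_squared >= 0")
    return 2 * wait_budget / (1 + cv_squared + 2 * wait_budget)


if __name__ == "__main__":
    # Hypothetical budget: mean wait of at most one service time.
    for label, cv2 in (("deterministic", 0.0), ("exponential", 1.0), ("highly variable", 4.0)):
        print(f"{label:16s} max utilization = {max_utilization(1.0, cv2):.2f}")
```

In this stylized model, the deterministic server can run at about two-thirds utilization within the same delay budget that limits a highly variable one to under one-third.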

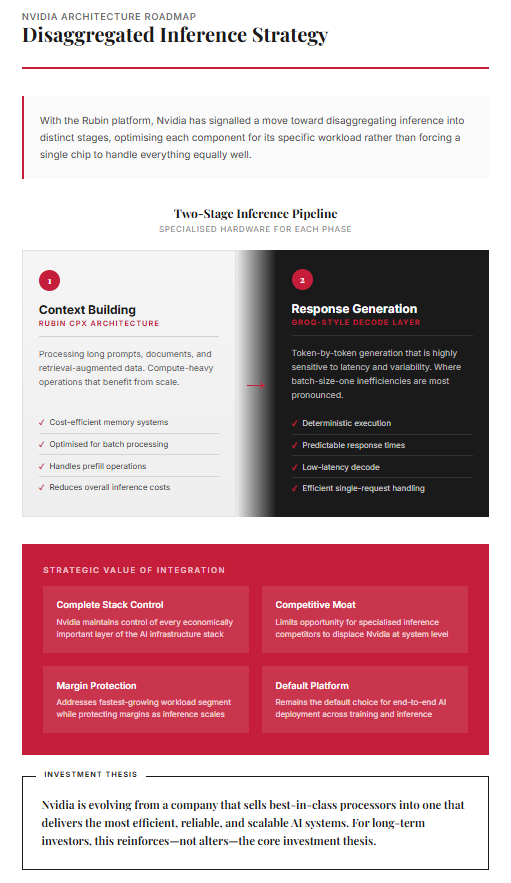

The Key Architectural Insight: Disaggregated Inference

The strategic importance of Groq becomes clearer when viewed alongside Nvidia’s next-generation roadmap.

With the Rubin platform, Nvidia has already signaled a move toward disaggregating inference into distinct stages, rather than forcing a single chip to handle everything equally well. In particular:

- Context building / prefill: This phase involves processing long prompts, documents, or retrieval-augmented data. It is compute-heavy and benefits from scale. Nvidia’s Rubin CPX architecture is optimized for this stage, using more cost-efficient memory systems to reduce overall inference costs.

- Response generation / decode: This phase produces tokens one by one and is highly sensitive to latency and variability. It is also where batch-size-one inefficiencies are most pronounced.

Groq’s technology aligns naturally with this second stage.

Rather than replacing GPUs, a Groq-style inference engine can function as a dedicated, low-latency decode layer, sitting alongside Rubin and Rubin CPX within a broader Nvidia system.
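The split between the two stages can be sketched as a simple dispatch plan. This is a minimal illustration, not Nvidia's or Groq's software: the pool names, the `Request` type, and the `plan` function are all hypothetical, and real systems would also need to move the model's intermediate state between stages.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical pool labels, used only for illustration.
PREFILL_POOL = "throughput-pool"    # compute-heavy context building
DECODE_POOL = "low-latency-pool"    # token-by-token response generation


@dataclass(frozen=True)
class Request:
    request_id: str
    prompt_tokens: int     # tokens ingested during prefill
    max_new_tokens: int    # tokens generated during decode


def plan(request: Request) -> List[Tuple[str, str, int]]:
    """Return (pool, phase, tokens) steps for one request.

    Prefill is one large step over the whole prompt; decode is one small
    step per generated token, so its cost is dominated by per-step latency.
    """
    steps = [(PREFILL_POOL, "prefill", request.prompt_tokens)]
    steps += [(DECODE_POOL, "decode", 1) for _ in range(request.max_new_tokens)]
    return steps


if __name__ == "__main__":
    for step in plan(Request("r1", prompt_tokens=2048, max_new_tokens=3)):
        print(step)
```

The point of the sketch is the shape of the work: one large, parallel-friendly step followed by many small sequential ones, which is why the two stages can reasonably be served by different hardware.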

Why This Matters for Batch-Size-One and Future Pooling

Today, batch-size-one inference is expensive because systems must be sized for worst-case response times. As AI adoption increases, this problem does not automatically disappear; in fact, strict service guarantees often prevent effective pooling of workloads.

A deterministic decode layer changes this dynamic. By offering predictable response times, it allows providers to:

- pool many low-latency requests safely,

- increase utilization without violating service guarantees,

- and lower the cost per token delivered.

In this sense, Groq’s technology becomes more valuable as AI usage scales, not less. It helps solve the economic challenge of delivering AI at scale, not just the technical one.

Financial Implications for Nvidia

From an investment perspective, the significance of this move lies in its impact on long-term economics rather than near-term revenues.

Integrating Groq’s inference capabilities strengthens Nvidia’s ability to:

- address the fastest-growing segment of AI workloads,

- protect margins as inference volumes scale,

- and remain the default platform for end-to-end AI deployment.

Importantly, this also limits the opportunity for specialized inference competitors to displace Nvidia at the system level.

What This Is—and Is Not

This development should be understood clearly:

- It is not a replacement for Nvidia’s GPUs

- It is not a shift away from Nvidia’s core architecture

- It is a reinforcement of Nvidia’s strategy to control every economically important layer of the AI stack

As with networking, software, and systems integration before it, this is Nvidia identifying a future bottleneck and addressing it early.

Bottom Line for Investors

Nvidia is evolving from a company that sells best-in-class processors into one that delivers the most efficient, reliable, and scalable AI systems.

The integration of Groq’s inference technology completes an important part of that vision. It strengthens Nvidia’s position as AI moves from training laboratories into everyday infrastructure and helps ensure that growth in AI usage translates into sustainable long-term profitability.

For long-term investors, this reinforces, rather than alters, the core Nvidia investment thesis.
