Google’s latest TPU v7 chip marks a major inflection point in the AI hardware race, closing the longstanding performance gap with NVIDIA’s cutting-edge GPUs. With comparable compute power (roughly 4.5–4.6 petaFLOPS), similar high-bandwidth memory capacity (192 GB of HBM3e), and impressive scalability across thousands of chips, TPU v7 demonstrates that Google can now compete toe-to-toe on raw specifications. This is a significant leap forward, especially considering that prior TPU generations often lagged behind NVIDIA’s offerings. Moreover, TPUs offer a clear Total Cost of Ownership (TCO) advantage in many large-scale deployments, often delivering similar or better throughput at 30–50% lower cost when factoring in chip pricing, power efficiency, and infrastructure design.

Yet despite this hardware catch-up, NVIDIA retains several enduring advantages that continue to secure its dominance. Chief among them is its mature and widely adopted CUDA software ecosystem, which includes a vast array of optimized libraries, developer tools, and community support. This makes NVIDIA GPUs accessible not just to large AI labs with deep engineering teams, but also to startups, researchers, and enterprises without the capacity to retool software stacks for alternative silicon. By contrast, platforms like Google’s TPUs (or Amazon’s Trainium) require more specialized engineering effort to unlock their full potential, a hurdle that limits adoption to highly technical teams with the bandwidth to invest in custom tooling and optimization.

Furthermore, even as rivals close in on one generation of hardware, NVIDIA’s faster product cadence means it never stays caught for long. With new GPUs arriving roughly every 12–18 months and intermediate upgrades in between, NVIDIA has consistently stayed one step ahead. What truly sets NVIDIA apart now, however, is its innovation in disaggregating inference workloads: separating the compute-heavy “prefill” phase from the memory-intensive “decode” phase and assigning them to distinct hardware. The introduction of Rubin CPX, a compute-optimized chip tailored specifically for prefill, allows the company to dramatically reduce inference costs by offloading this work from its more expensive, memory-rich GPUs. This shift represents more than an efficiency gain; it signals a new architectural paradigm for serving large language models, one that could reshape how inference infrastructure is built.

In essence, while Google’s TPUs have proven they can match, and in some cases beat, NVIDIA on cost and performance for certain high-scale use cases, NVIDIA’s broad utility, unmatched software stack, and forward-thinking hardware strategy continue to give it the upper hand. Its ability to serve everyone from cloud giants to solo researchers, and to re-establish technical leadership with each generation, positions it not just as the current leader in AI infrastructure but as the one best equipped to define its next frontier.

Google’s Big Leap in Performance

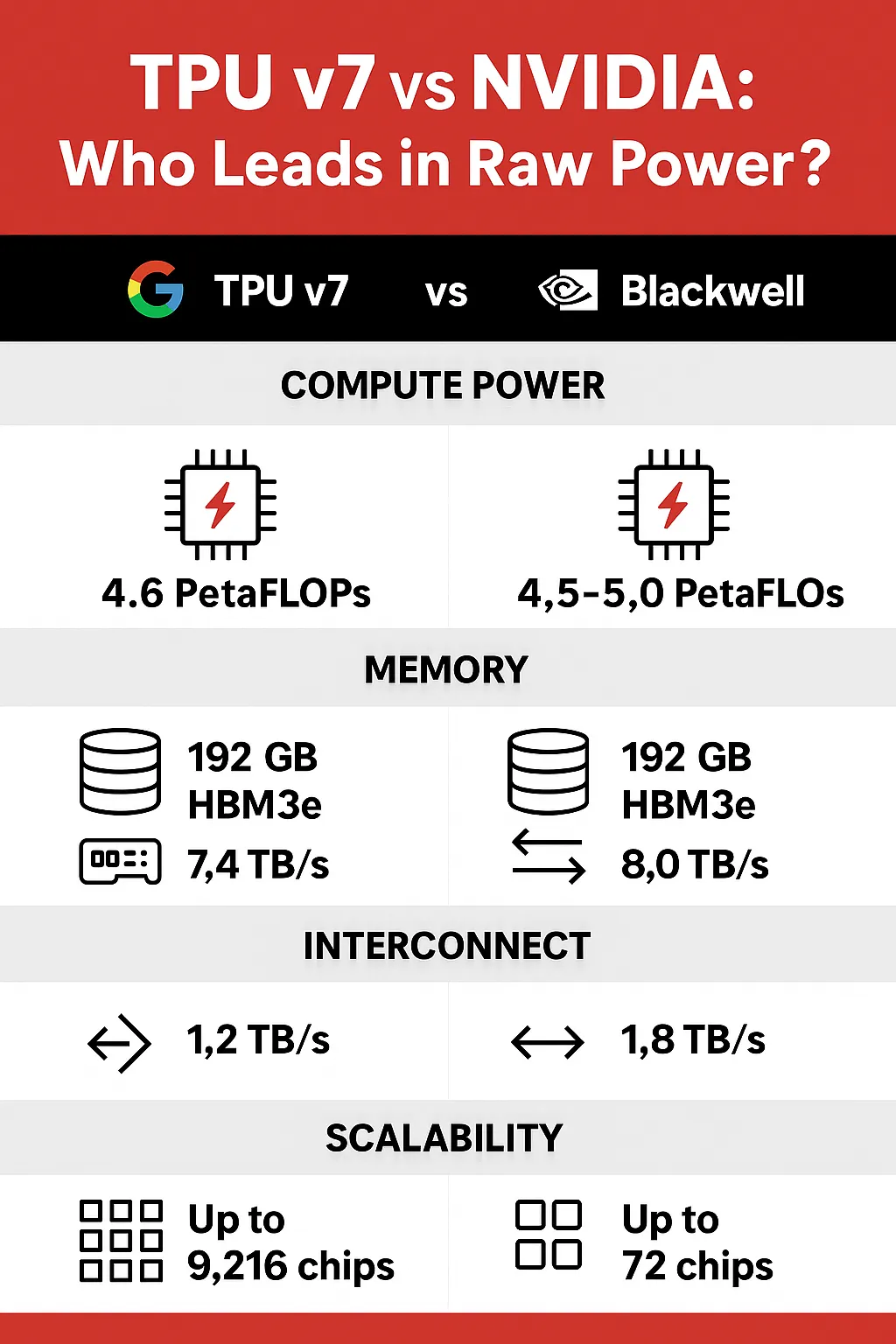

Google's TPU v7 is its most powerful AI chip yet. It delivers roughly 4.6 petaFLOPS of compute, matching or slightly beating NVIDIA’s Blackwell B200 GPU and coming close to its more powerful siblings, the GB200 and GB300. It also offers 192 GB of high-bandwidth memory (HBM3e) with roughly 7.4 TB/s of bandwidth. For context, NVIDIA’s B200 offers similar memory capacity and slightly higher bandwidth (~8 TB/s), while the older H100 lags behind in both compute and memory.
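One way to read compute and bandwidth together is the roofline "ridge point": peak FLOPS divided by memory bandwidth, i.e. how many operations a workload must perform per byte fetched from HBM before the chip becomes compute-bound. The sketch below uses the approximate figures above; the 4.5 petaFLOPS value for the B200 is an assumption (the text only says TPU v7 matches or slightly beats it), and both peaks are assumed to be quoted at the same numeric precision.

```python
# Approximate peak figures from the comparison above. The B200 compute value is an
# assumption, and both peaks are assumed to be at the same numeric precision.
SPECS = {
    "TPU v7": {"peak_flops": 4.6e15, "hbm_bytes_per_s": 7.4e12},
    "B200": {"peak_flops": 4.5e15, "hbm_bytes_per_s": 8.0e12},
}


def ridge_point(peak_flops: float, hbm_bytes_per_s: float) -> float:
    """FLOPs per byte of HBM traffic above which a kernel is compute-bound."""
    return peak_flops / hbm_bytes_per_s


for chip, s in SPECS.items():
    print(f"{chip}: ridge point ~{ridge_point(**s):.0f} FLOPs/byte")
```

Both chips land in the same ballpark (several hundred FLOPs per byte), which is another way of saying their balance of compute to memory is similar. That balance matters later for inference, where some phases reuse each byte far less than others.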

One of Google’s biggest advantages is scale. TPU v7 chips can be linked into massive clusters of up to 9,216 chips in a single system, enough to train some of the largest models in the world. NVIDIA, by comparison, offers dense GPU racks like the NVL72 (72 GPUs), but reaching TPU-pod scale means linking many such racks over more complex networking.
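A quick back-of-the-envelope calculation shows what that scale gap means. The sketch multiplies chip counts by per-chip peak compute; the 4.5 petaFLOPS per GPU figure for an NVL72-class rack is an assumption, and real systems never sustain peak, so treat the result as an order-of-magnitude comparison only.

```python
import math

TPU_V7_PFLOPS = 4.6      # approximate per-chip peak quoted above
GPU_PFLOPS = 4.5         # assumed per-GPU peak for an NVL72-class rack
TPU_POD_CHIPS = 9_216    # largest TPU v7 system quoted above
NVL72_GPUS = 72


def aggregate_exaflops(chips: int, pflops_per_chip: float) -> float:
    """Peak aggregate compute in exaFLOPS (1 EFLOPS = 1,000 PFLOPS)."""
    return chips * pflops_per_chip / 1_000


pod = aggregate_exaflops(TPU_POD_CHIPS, TPU_V7_PFLOPS)
rack = aggregate_exaflops(NVL72_GPUS, GPU_PFLOPS)
# Round up: a partial rack still has to be bought, powered, and networked.
racks_needed = math.ceil(pod / rack)
print(f"TPU v7 pod: ~{pod:.1f} EFLOPS peak")
print(f"NVL72 rack: ~{rack:.2f} EFLOPS peak")
print(f"NVL72 racks needed to match one pod on paper: {racks_needed}")
```

Under these assumptions, matching a single full pod on paper takes on the order of 130 NVL72 racks, and every one of them has to be stitched together by the scale-out network, which is where the extra complexity comes from.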

Lower Cost and Better Energy Efficiency

Where TPUs really shine is cost. Because Google co-designs these chips with Broadcom, it obtains them at much lower margins than NVIDIA charges; NVIDIA sells its chips at high markups, sometimes 70–80%. This cost advantage lets Google offer TPU compute to customers (like Anthropic) for up to 30–50% less than NVIDIA GPUs, depending on utilization.
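The "depending on utilization" caveat is easiest to see by pricing the compute you actually get rather than the chip-hour. The sketch below divides an hourly price by delivered throughput (peak × utilization). Every price and utilization value is a hypothetical placeholder, not a published rate.

```python
# Hypothetical prices and utilization: placeholders, not published rates.
def cost_per_effective_pflop_hour(price_per_chip_hour: float,
                                  peak_pflops: float,
                                  utilization: float) -> float:
    """Dollars per PFLOP-hour of compute actually delivered (peak x utilization)."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return price_per_chip_hour / (peak_pflops * utilization)


def savings(cost: float, baseline: float) -> float:
    """Fraction by which `cost` undercuts `baseline`."""
    return 1 - cost / baseline


gpu = cost_per_effective_pflop_hour(price_per_chip_hour=5.00, peak_pflops=4.5, utilization=0.50)
for tpu_util in (0.50, 0.40, 0.35):
    tpu = cost_per_effective_pflop_hour(price_per_chip_hour=3.00, peak_pflops=4.6, utilization=tpu_util)
    print(f"TPU utilization {tpu_util:.0%}: {savings(tpu, gpu):.0%} cheaper per effective PFLOP-hour")
```

With these made-up inputs, a cheaper chip-hour only turns into a 30–50% saving while the TPUs are kept as busy as the GPUs; let utilization slip and most of the price advantage evaporates.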

Power consumption is another win. TPU v7 delivers roughly 2.8× better performance per watt than NVIDIA’s H100 and still beats the newer Blackwell GPUs in energy efficiency. In large data centers, that can translate into millions of dollars saved on electricity and cooling over time.
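To see how "millions" can add up, here is a rough sketch for a fixed workload. It assumes power scales inversely with performance per watt, and it uses a hypothetical 10 MW H100 fleet, an assumed electricity price, and an assumed PUE (power usage effectiveness, the multiplier for cooling and facility overhead) that is taken to be the same for both fleets.

```python
HOURS_PER_YEAR = 8_760


def annual_energy_cost(it_power_mw: float, usd_per_kwh: float, pue: float) -> float:
    """Yearly electricity cost; PUE scales IT power up to include cooling and overhead."""
    kwh = it_power_mw * 1_000 * HOURS_PER_YEAR * pue
    return kwh * usd_per_kwh


# Hypothetical inputs: a fixed workload that needs 10 MW of IT power on H100s.
H100_FLEET_MW = 10.0
PERF_PER_WATT_GAIN = 2.8   # TPU v7 vs H100, as quoted above
USD_PER_KWH = 0.08         # assumed electricity price
PUE = 1.2                  # assumed, and assumed equal for both fleets

# Same work at 2.8x the performance per watt needs 1/2.8 of the power.
tpu_fleet_mw = H100_FLEET_MW / PERF_PER_WATT_GAIN
annual_savings = (annual_energy_cost(H100_FLEET_MW, USD_PER_KWH, PUE)
                  - annual_energy_cost(tpu_fleet_mw, USD_PER_KWH, PUE))
print(f"TPU fleet power: {tpu_fleet_mw:.2f} MW")
print(f"Estimated annual electricity savings: ${annual_savings:,.0f}")
```

Under these assumptions the saving is in the low millions of dollars per year for a single 10 MW deployment; real figures depend heavily on local power prices, cooling design, and how closely the workloads match.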

Purpose-Built Design and Scale Efficiency

TPUs are designed specifically for AI workloads. Unlike general-purpose GPUs, TPUs focus on dense matrix math — the heart of machine learning. This means they can be more efficient at running large language models when properly optimized. In real-world use, a well-tuned TPU cluster can outperform GPUs with higher peak specs because it stays busier and wastes less time.
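The claim that a busier cluster can beat one with higher peak specs comes down to simple arithmetic: wall-clock time depends on sustained throughput (peak × utilization), not on peak alone. The sketch below compares two hypothetical clusters of equal size; the job size, chip counts, peaks, and utilization figures are all illustrative assumptions.

```python
SECONDS_PER_DAY = 86_400


def days_to_train(total_flops: float, chips: int, peak_flops_per_chip: float,
                  utilization: float) -> float:
    """Wall-clock days at a given sustained fraction of peak (model FLOPs utilization)."""
    sustained_flops_per_s = chips * peak_flops_per_chip * utilization
    return total_flops / sustained_flops_per_s / SECONDS_PER_DAY


JOB_FLOPS = 1e25  # hypothetical total training compute

# Hypothetical clusters of equal size: B has ~20% higher peak, A keeps its chips busier.
cluster_a = days_to_train(JOB_FLOPS, chips=4_096, peak_flops_per_chip=4.6e15, utilization=0.55)
cluster_b = days_to_train(JOB_FLOPS, chips=4_096, peak_flops_per_chip=5.5e15, utilization=0.40)
print(f"Cluster A (lower peak, 55% utilization): {cluster_a:.1f} days")
print(f"Cluster B (higher peak, 40% utilization): {cluster_b:.1f} days")
```

Here the lower-peak cluster finishes first because it converts more of its peak into useful work, which is the "stays busier" effect in miniature.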

Moreover, Google’s architecture allows for massive scale-up: fewer duplicated systems and more streamlined infrastructure. A TPU pod can house thousands of chips, all working in sync. NVIDIA’s systems are catching up in density but still rely on smaller GPU nodes connected over network switches.

The Software Advantage: Why NVIDIA Still Leads

Despite Google's hardware and cost advantages, NVIDIA’s ecosystem gives it a huge edge. Its CUDA software platform is deeply embedded in the AI community, offering tools, libraries, and support that make it easy to develop and deploy models.

Moving from NVIDIA to Google’s TPU stack often means rewriting large parts of your code — a major barrier for smaller teams. NVIDIA’s ecosystem supports a broad range of use cases out of the box, while TPU performance often requires extensive engineering effort to reach full potential.

Disaggregating Inference: NVIDIA’s Next Big Bet

One of NVIDIA’s smartest recent moves is in how it handles AI inference (running models after training). Inference has two parts:

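- Prefill: The compute-heavy step where the entire input prompt is processed in one parallel pass, building the model’s key-value (KV) cache.

- Decode: The memory-heavy step where the model generates output one token at a time, re-reading its weights and the growing KV cache for every new token.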
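Why one phase is compute-heavy and the other memory-heavy can be shown with the arithmetic intensity (FLOPs per byte moved) of a single weight matrix multiply. During prefill, each weight fetched from memory is reused across every prompt token; during decode with one sequence, it is used for just one token. The hidden size and prompt length below are hypothetical, and the sketch ignores attention and KV-cache reads, which add even more memory traffic during decode. The ridge point is computed from the TPU v7 specs quoted earlier.

```python
def matmul_intensity(tokens: int, d_in: int, d_out: int, bytes_per_value: int = 2) -> float:
    """FLOPs per byte for (tokens x d_in) @ (d_in x d_out), touching each value once."""
    flops = 2 * tokens * d_in * d_out                       # one multiply + one add per MAC
    weight_bytes = d_in * d_out * bytes_per_value            # weights read once
    act_bytes = tokens * (d_in + d_out) * bytes_per_value    # inputs read + outputs written
    return flops / (weight_bytes + act_bytes)


RIDGE = 4.6e15 / 7.4e12  # ~622 FLOPs/byte: TPU v7 peak compute / HBM bandwidth


def bound(intensity: float, ridge: float = RIDGE) -> str:
    """Classify a kernel by which side of the roofline ridge point it falls on."""
    return "compute-bound" if intensity >= ridge else "memory-bound"


d_model = 8_192  # hypothetical hidden size
prefill = matmul_intensity(tokens=4_096, d_in=d_model, d_out=d_model)  # whole prompt at once
decode = matmul_intensity(tokens=1, d_in=d_model, d_out=d_model)       # one new token
print(f"prefill: {prefill:.0f} FLOPs/byte ({bound(prefill)})")
print(f"decode:  {decode:.2f} FLOPs/byte ({bound(decode)})")
```

Prefill sits well above the ridge point, so it wants raw compute; single-sequence decode sits far below it, so it is limited by memory bandwidth. Batching many sequences raises decode intensity, but decode still leans on memory far more than prefill does.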

NVIDIA’s upcoming Rubin architecture introduces a new chip, the Rubin CPX, specifically for the prefill stage. This chip uses cheaper memory (GDDR7 rather than HBM) and prioritizes raw compute. The decode stage remains on the main, high-memory GPUs like Rubin or Blackwell.
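The routing idea behind disaggregated serving can be sketched as two separate device pools: each request’s prompt goes to a prefill pool, the resulting KV cache is handed off, and token generation runs in a decode pool. This is a minimal, hypothetical model for illustration only. The class and function names are invented, not any NVIDIA API, and token counts stand in as a crude proxy for work.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    req_id: int
    prompt_tokens: int   # processed in one parallel pass during prefill
    output_tokens: int   # generated one at a time during decode


@dataclass
class KVCacheHandle:
    """Hypothetical stand-in for the KV cache that prefill hands to decode."""
    req_id: int
    cached_tokens: int


@dataclass
class Pool:
    """A group of identical devices; load is tracked in tokens as a crude work proxy."""
    name: str
    devices: int
    load: list = field(init=False)

    def __post_init__(self) -> None:
        self.load = [0] * self.devices

    def assign(self, tokens: int) -> int:
        """Place work on the least-loaded device and return that device's index."""
        idx = min(range(self.devices), key=lambda i: self.load[i])
        self.load[idx] += tokens
        return idx


def serve(requests, prefill_pool: Pool, decode_pool: Pool):
    """Route each request's prefill and decode phases to separate pools."""
    schedule = []
    for r in requests:
        p_dev = prefill_pool.assign(r.prompt_tokens)    # compute-heavy phase
        kv = KVCacheHandle(r.req_id, r.prompt_tokens)   # would be transferred between pools
        d_dev = decode_pool.assign(r.output_tokens)     # memory-heavy phase
        schedule.append((r.req_id, p_dev, d_dev, kv.cached_tokens))
    return schedule


# Two prefill devices per decode device, loosely mirroring a 2:1 CPX-to-GPU split.
prefill = Pool("prefill (CPX-like)", devices=2)
decode = Pool("decode (HBM GPU)", devices=1)
reqs = [Request(0, 8_000, 200), Request(1, 500, 400), Request(2, 3_000, 100)]
for req_id, p_dev, d_dev, kv_tokens in serve(reqs, prefill, decode):
    print(f"req {req_id}: prefill on device {p_dev}, decode on device {d_dev}, "
          f"KV cache of {kv_tokens} tokens")
```

The point of the split is visible in the pool definitions: each pool can be built from whatever hardware suits its phase, and the two can be sized independently.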

By splitting inference this way, NVIDIA makes its hardware more efficient: each chip does what it is best at, which lowers the total cost of serving AI responses. The new Rubin racks will combine 72 high-end GPUs with 144 CPX chips to maximize performance and reduce wasted resources. If the workload mix shifts even further toward prefill (for example, toward longer prompts), customers can also add Rubin CPX chips on their own, giving them another layer of flexibility.
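Why that flexibility matters shows up in a simple sizing exercise: the number of prefill chips scales with prompt length, while decode chips scale with output length, so no single fixed ratio fits every workload. The per-chip throughputs and request rate below are hypothetical, and real decode throughput also varies with batch size and context length.

```python
import math


def chips_needed(req_per_s: float, prompt_tokens: int, output_tokens: int,
                 prefill_tok_per_s_per_chip: float,
                 decode_tok_per_s_per_chip: float) -> tuple[int, int]:
    """Return (prefill_chips, decode_chips) needed to keep up with the request rate."""
    prefill = math.ceil(req_per_s * prompt_tokens / prefill_tok_per_s_per_chip)
    decode = math.ceil(req_per_s * output_tokens / decode_tok_per_s_per_chip)
    return prefill, decode


# Hypothetical per-chip throughputs (tokens/s); not measured figures.
PREFILL_TPS = 50_000
DECODE_TPS = 5_000

for prompt in (2_000, 32_000, 128_000):
    p, d = chips_needed(100, prompt, 500, PREFILL_TPS, DECODE_TPS)
    print(f"prompt={prompt:>7,} tokens: {p} prefill chips, {d} decode chips, ratio {p / d:.1f}:1")
```

With short prompts the hypothetical deployment needs fewer prefill chips than decode chips; with very long prompts it needs many times more. Adding CPX capacity alone is a way to follow that shift without buying more memory-rich GPUs.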

Early projections suggest this setup could rival or even beat TPU pods in inference cost, especially for long or high-volume workloads. It also reinforces NVIDIA’s position — offering competitive cost and performance without sacrificing the developer experience that CUDA provides.
