Why the world is short of “memory,” how the hierarchy is being re-built, and who stands to benefit

The next phase of the AI build-out is no longer constrained by compute alone. It is increasingly constrained by memory: the ability to store, move, and retrieve data fast enough and cheaply enough to keep AI systems productive at scale.

As AI usage shifts from episodic model training toward always-on inference, the industry is facing an unprecedented demand–supply imbalance across the entire memory stack. This imbalance is being driven not just by faster models, but by an explosion in state: longer context windows, retrieval-augmented generation, persistent AI memory, and the massive volumes of AI-generated content that must be stored, indexed, and accessed.

To address this, the industry is rapidly re-architecting the memory hierarchy, tier by tier:

- On-chip and accelerator memory (HBM, SRAM) has become the primary bottleneck, with supply tightly constrained as AI accelerators absorb a disproportionate share of advanced memory capacity.

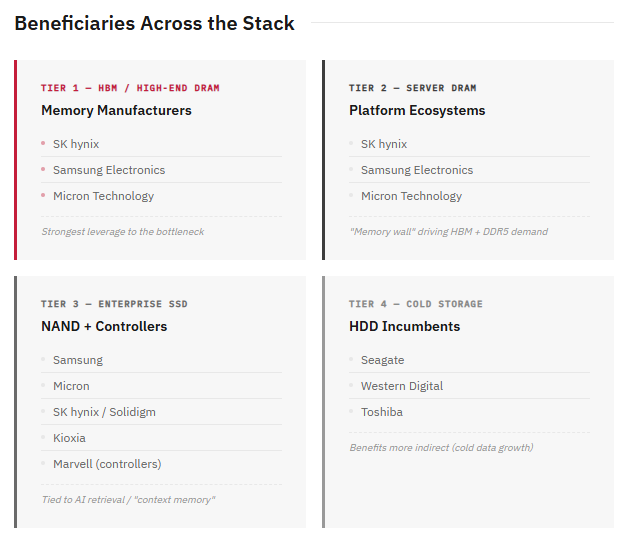

Primary beneficiaries: SK hynix, Samsung Electronics, Micron Technology.

- System memory (server DRAM) is being indirectly squeezed as manufacturers prioritize HBM and premium AI memory, tightening supply across conventional DRAM markets.

Primary beneficiaries: the same leading DRAM suppliers, with rising attach rates in AI-centric systems.

- Warm “context memory”, a term recently highlighted by Nvidia’s CEO Jensen Huang, is emerging as a critical new tier. This layer relies on enterprise SSDs (NAND flash–based) to store and retrieve large volumes of AI context—such as retrieval databases, embeddings, and prior conversation history—without forcing everything into expensive HBM.

Primary beneficiaries: Samsung, Micron, SK hynix / Solidigm, Sandisk, Kioxia, alongside key controller providers such as Marvell.

- Cold storage (HDDs) continues to play a role in archiving and long-term retention of AI-generated data, though it sits outside the performance-critical path.

Primary beneficiaries: Seagate, Western Digital, Toshiba (HDD business).

The central investment insight is that AI is becoming memory-limited rather than compute-limited. While GPUs remain essential, the economic and physical constraints of memory, especially the high-bandwidth and fast-access tiers, are now dictating system design, capital allocation, and supply-chain dynamics.

This shift creates a rare situation in which multiple layers of the memory ecosystem benefit simultaneously, making memory one of the most compelling structural themes within the broader AI opportunity set.

Why there is a memory shortage in the first place

The “shortage” is not one single product running out. It is a stack-wide imbalance created by three forces:

1) AI shifts memory demand from “commodity” to “specialty.”

High-bandwidth memory (HBM) is now the centerpiece of AI accelerators, and suppliers are prioritizing it because it is far more profitable. This crowds out capacity that previously went to conventional DRAM used in PCs and smartphones—something Samsung and SK hynix have explicitly warned about.

2) Supply can’t ramp quickly.

Memory manufacturing is capital-intensive and slow to expand. Even when suppliers boost capex, new capacity takes years to show up. Industry commentary increasingly frames this as a multi-year constraint.

3) Inference scales “state” and “content,” not just compute.

As AI usage spreads, we are not just training models—we are running them continuously. That creates an explosion of:

- conversation histories,

- retrieval databases (vector stores),

- logs and compliance records,

- model versions and variants,

- and AI-generated content that must be stored and indexed.

So even if training becomes a smaller share of AI spend over time, the amount of stored data and retrievable context can keep rising.

The memory hierarchy: why tiering matters

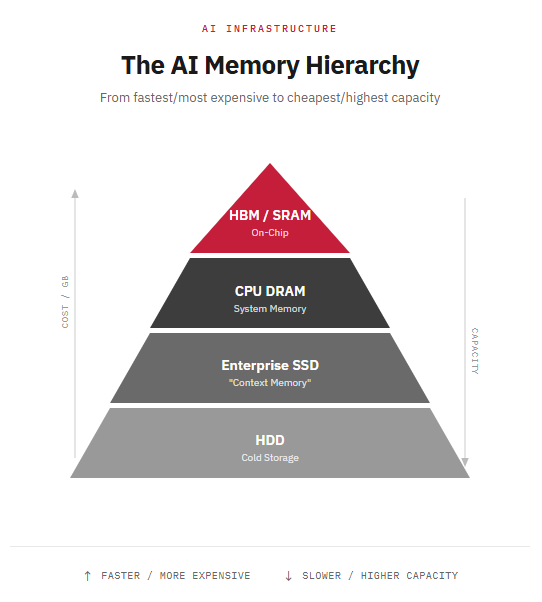

A useful way to understand this is to think of AI infrastructure as a memory pyramid—with different tiers solving different problems.

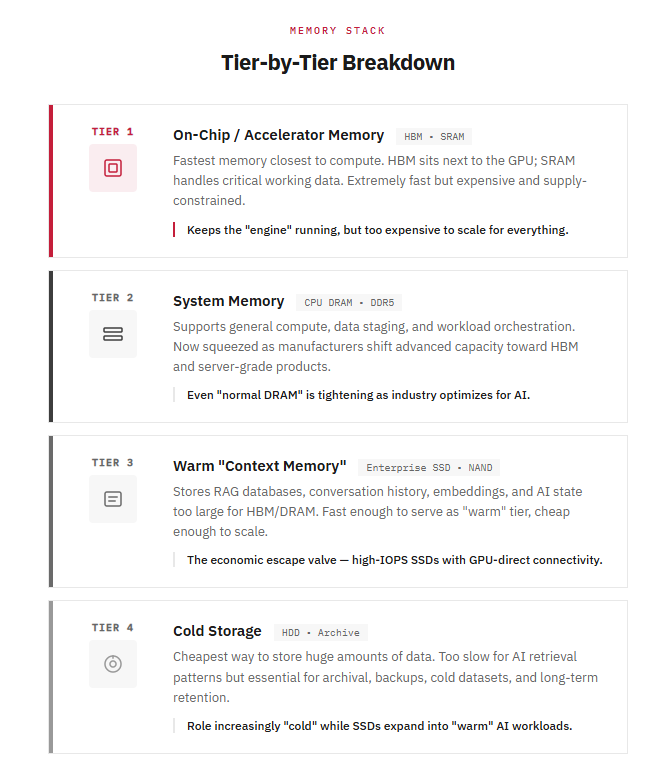

Tier 1: On-chip / accelerator memory (HBM and SRAM)

This is the fastest memory closest to the compute engine. It is what allows AI accelerators to work efficiently.

- HBM (High Bandwidth Memory): extremely fast, sits next to the GPU, but expensive and supply constrained.

- SRAM (on-chip memory): even faster, used for tiny but critical working data.

This tier is where the pressure is most visible: HBM is pulling capital and wafer capacity toward AI, tightening the rest of DRAM supply.

Key takeaway: HBM/SRAM keep the “engine” running, but they are too expensive to scale for everything.

Tier 2: System memory (CPU DRAM)

This is the next layer—still fast, but not as close to the accelerator. It supports:

- general compute,

- data staging,

- and workload orchestration.

This tier is now being squeezed as manufacturers shift advanced capacity toward HBM and server-grade products, contributing to broader shortages and pricing pressure.

Key takeaway: Even “normal DRAM” is tightening because the industry is optimizing for premium AI demand.

Tier 3: Warm tier “context memory” (enterprise SSDs / eSSDs, NAND-based)

This is the tier Jensen Huang has been pointing toward: AI systems need a way to store and retrieve large amounts of “context” without forcing everything into expensive HBM.

In practical terms, “context memory” refers to the data AI systems may need to retrieve during inference—such as:

- large retrieval databases (RAG),

- prior conversation history,

- embeddings and indexes,

- and AI state that is too large to keep permanently in HBM/DRAM.
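
A rough back-of-envelope sketch shows why some inference state outgrows HBM. The formula is the standard size of a transformer key-value cache (one form of per-session AI state); the model dimensions and the 80 GiB HBM capacity below are illustrative assumptions, not figures from this article or from any specific product.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, tokens, bytes_per_value=2):
    """Size of a transformer KV cache for one sequence.

    The leading factor of 2 covers keys and values;
    bytes_per_value=2 assumes 16-bit (FP16/BF16) storage.
    """
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_value


# Hypothetical model shape, chosen only for illustration.
LAYERS, KV_HEADS, HEAD_DIM = 80, 8, 128
HBM_BYTES = 80 * 1024**3  # assumed accelerator HBM capacity

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, tokens=1)
per_session = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, tokens=128_000)
# Upper bound: ignores the HBM already consumed by model weights.
sessions_in_hbm = HBM_BYTES // per_session

print(f"{per_token / 1024:.0f} KiB per token")
print(f"{per_session / 1024**3:.1f} GiB per 128k-token session")
print(f"{sessions_in_hbm} such sessions fit in HBM")
```

Under these assumed numbers, a single long-context session occupies tens of gigabytes, so only a couple of idle sessions could stay resident in HBM at once. That is the kind of state a cheaper warm tier is meant to absorb.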

This is where enterprise SSDs become strategically important. They are fast enough to serve as a “warm” tier and cheap enough to scale in capacity.

Nvidia’s CES messaging emphasized Rubin as a platform and also highlighted storage as a component of the broader system.

Separately, Kioxia has openly discussed (with Nvidia) a roadmap toward ultra-high-IOPS SSDs directly connected to GPUs, explicitly targeting AI workloads that involve many small, fast reads.

Key takeaway: “Context memory” is the economic escape valve—moving some AI state and retrieval workloads onto fast flash rather than scaling HBM indefinitely.

Tier 4: Cold storage (HDDs)

HDDs remain the cheapest way to store huge amounts of data, but they are too slow for many AI retrieval patterns.

They still matter for:

- archival,

- backups,

- cold datasets,

- and long-term retention.

Key takeaway: HDDs remain relevant, but their role is increasingly “cold,” while SSDs expand into “warm” AI-accessed datasets.

How tiering solves the problem

The reason tiering is becoming essential is that AI is now constrained by memory economics:

- If you try to keep too much in HBM, costs explode.

- If you keep too much on HDD, retrieval becomes too slow.

- If you place the right data in the right tier, you get a system that is both fast and economical.

The industry’s direction is to keep:

- the hottest, active data in HBM/SRAM,

- the working system layer in DRAM,

- the retrieval and large context layer in SSD flash,

- and the archive on HDD.
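
The placement logic above can be sketched as a simple rule: pick the cheapest tier that still meets the workload's latency budget. This is a minimal illustration, not a description of any vendor's tiering software. The `Tier` class, the `place` function, the latency figures (rough orders of magnitude as seen from the accelerator), and the cost ranks are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    latency_s: float    # rough order-of-magnitude access latency (assumed)
    relative_cost: int  # rank only: higher means more expensive per GB


# Placeholder figures for illustration; real values vary widely by product.
TIERS = [
    Tier("HBM", 1e-7, 4),
    Tier("DRAM", 1e-6, 3),
    Tier("SSD", 1e-4, 2),
    Tier("HDD", 1e-2, 1),
]


def place(latency_budget_s: float) -> Tier:
    """Return the cheapest tier whose latency fits within the budget."""
    fits = [t for t in TIERS if t.latency_s <= latency_budget_s]
    if not fits:
        raise ValueError("no tier meets this latency budget")
    return min(fits, key=lambda t: t.relative_cost)


# Hypothetical workloads and the latency each can tolerate, in seconds.
workloads = {
    "active model state": 1e-7,
    "data staging": 1e-5,
    "RAG lookup": 1e-3,
    "archive restore": 1.0,
}
for name, budget in workloads.items():
    print(f"{name}: {place(budget).name}")
```

With these assumed budgets, the active state lands in HBM, staging in DRAM, retrieval on SSD, and archives on HDD. A real system would also weigh bandwidth, capacity, and access frequency, which this sketch leaves out.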

This is precisely why “high-performance SSD” discussions (IOPS, latency, GPU-direct connectivity) are suddenly strategic rather than incremental.

Who stands to benefit: winners across the tiers

Tier 1 winners: HBM / high-end DRAM

These companies have the strongest leverage to the bottleneck:

- SK hynix (HBM leader in many market discussions)

- Samsung Electronics

- Micron (expanding capacity; highlighting shortage-driven investment)

Tier 2 winners: server DRAM / platform ecosystems

- Same DRAM makers (above), plus system/platform beneficiaries that drive DRAM attach rates.

TrendForce has been explicit that AI is driving a “memory wall” dynamic, pushing demand for HBM and DDR5 and tightening supply.

Tier 3 winners: enterprise SSD (NAND + controllers + system integration)

NAND manufacturers with enterprise SSD scale:

- Samsung

- Micron

- SK hynix / Solidigm

- Kioxia (notably visible in AI SSD roadmap discussions with Nvidia)

- Sandisk

Controllers / enabling silicon (often overlooked):

- Marvell (enterprise SSD controllers are a critical enabler of higher-performance flash systems)

Tier 4 winners: HDD cold-storage incumbents

- Seagate

- Western Digital

- Toshiba (HDD business)

Important nuance: HDD benefits are more indirect (cold data growth), while SSD benefits are more directly tied to “context memory” and AI retrieval workloads.

Bottom line for investors

The market narrative is shifting from “AI needs more compute” to “AI needs more memory and data movement.” That shift matters because it:

- reinforces a multi-year memory upcycle (HBM + DDR5),

- drives SSD adoption deeper into AI systems as a warm “context” tier, and

- creates a broader supply squeeze as memory makers prioritize the most profitable AI products.

The most durable takeaway is this:

Training created the AI boom. Inference scales it. And inference increasingly scales “state” and “storage,” which makes memory the bottleneck.
